What is Robust Intelligence?

Welcome to Robust Intelligence, the solution for maintaining AI integrity. The platform helps you identify and eliminate the risks inherent to production AI by integrating into your AI pipeline.

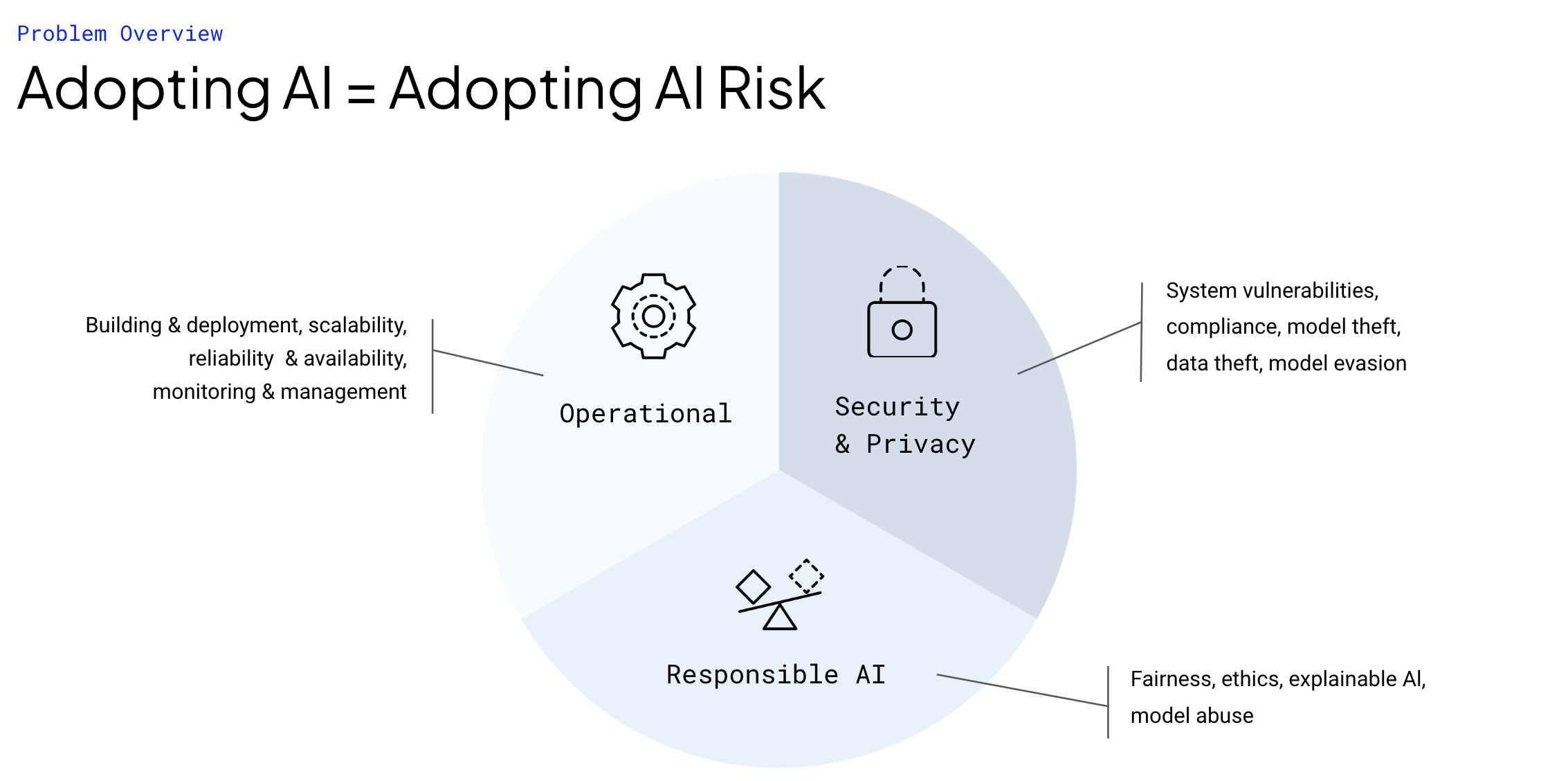

What is AI Risk?

AI risk refers to the potential negative consequences of using AI models in production, including operational, ethical, and security risks.

Security risks occur when models are vulnerable to adversarial actors who try to exploit or compromise a machine learning model’s performance. Proprietary and third-party models may be susceptible to data leakage and adversarial attacks, including system breaches, model evasion, and theft. All models must be regularly screened before and during use to protect against a wide array of evolving vulnerabilities and threats.

Ethical risks stem from model behavior that violates norms, laws, regulations, or other governance standards. AI models can have or develop a variety of ethical issues, including bias, unfair outcomes, and use of offensive language. These issues can lead to inequality, reputational damage, and lawsuits.

Operational risks can arise from subpar model performance. Changes in data can lead to model failures including performance drift, corruption, and broken data pipelines. These failures can affect an organization’s operations in terms of lost revenue, lost trust, damage to the organization’s reputation, and resource constraints.

Why We Test

Why We Run Stress Tests

Stress Testing measures the robustness of your AI model before you deploy it. Test results identify security, ethical, and operational risks and weaknesses in your model and provide you with insights on how to improve it before deployment.

Scheduled Stress Testing to Detect Emerging Risks

Scheduled Stress Testing continually validates AI models that are deployed in production in order to detect when vulnerabilities or failures emerge, such as data drift or inability to withstand adversarial attacks.

Risk Testing Built into Your AI Pipeline

Robust Intelligence allows you to test your models for security, ethical, and operational risk by integrating into the following phases of your model development and deployment pipeline:

During model development, AI Stress Testing measures the robustness of your model by running dozens of pre-configured tests. Each test checks the model’s vulnerability to a specific form of potential failure in production.

Once models are deployed into production, set up Scheduled Stress Testing to automatically alert you to issues such as data drift and detected adversarial attacks.

Measuring AI Risk

In modern engineering organizations, data scientists and machine learning engineers typically invest a significant amount of effort into the development stage of the model life cycle, which includes tasks such as data ingestion and cleaning, feature extraction, and model training. During this stage, models are evaluated mainly based on their performance on clean test data.

While these metrics may be useful in controlled development environments, deploying models in production introduces a new set of challenges and risks that are often overlooked. Once a model is deployed, data scientists lose control over how the model is used and how data is passed into it. Moreover, they have no oversight over the data pipelines that incorporate the model. Even when the model is used correctly, the real world can change, leading to issues like distributional shifts in production data, which can silently degrade model performance.

These risks can manifest in several ways, such as operational, ethical, and security risks. Operational risks arise from subpar model performance, which may occur due to factors such as data drift or other distributional shifts in the production environment. Ethical risks occur when model behavior violates regulatory or governance standards, which may lead to legal or reputational harm for the organization. Finally, security risks arise when models are vulnerable to adversarial actors who can exploit or compromise the model’s performance.

To mitigate these risks, it’s important to test models continually throughout their lifecycle, during development and after deployment. This ensures that you catch any issues as they arise and can take corrective action before they cause significant problems.
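As one illustration of how a distributional shift between development data and production data might be quantified, the sketch below computes a Population Stability Index (PSI) for a single numeric feature. This is a generic technique, not a description of how Robust Intelligence implements its drift tests; the function name, the bin count, and the alert threshold are all assumptions chosen for the example.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float], evaluation: Sequence[float], bins: int = 10
) -> float:
    """PSI between two samples, using equal-width bins over the reference range."""
    lo, hi = min(reference), max(reference)
    # Fall back to a width of 1.0 if the reference feature is constant.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref = bin_fractions(reference)
    ev = bin_fractions(evaluation)
    return sum((e - r) * math.log(e / r) for r, e in zip(ref, ev))


# Hypothetical data: the production sample is shifted relative to the reference.
reference_sample = [i / 100 for i in range(100)]
production_sample = [v + 0.5 for v in reference_sample]

DRIFT_THRESHOLD = 0.2  # assumption: tune this per feature and use case
psi = population_stability_index(reference_sample, production_sample)
drift_detected = psi > DRIFT_THRESHOLD
```

Running a check like this on a schedule, rather than once at development time, is what allows a silent shift in production data to surface as an alert.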

Key Machine Learning Tasks Covered

Robust Intelligence provides model testing across the following broad task categories:

Generative: Question Answering

Tabular: Binary Classification, Multiclass Classification, Regression, Learning to Rank

Natural Language Processing (NLP): Text Classification, Named Entity Recognition, Natural Language Inference, Fill-Mask Modeling

Computer Vision (CV): Image Classification, Object Detection

Stress Testing

Stress Testing is a comprehensive set of tests that measure the robustness of your AI model before deployment. These tests are designed to identify potential weaknesses and failure modes of your prospective AI deployment, and each test represents a specific axis of potential model vulnerability.

Scheduled Stress Testing automatically validates AI models that are deployed in production at regular intervals, such as hourly, daily, or weekly, without human intervention.

The Stress Testing framework comes with a wide range of pre-configured tests, from simple operational risk tests that measure whether model performance is sufficiently high, to complex security tests that identify signatures that are evidence of adversarial attacks. By running hundreds of these tests across both your model and associated datasets, Robust Intelligence can identify potential vulnerabilities in your model, and provide you with insights on how to improve its robustness.
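To make the idea of a single test concrete, here is a minimal sketch of one kind of robustness check: jitter one input feature and measure how much a black-box model's predictions move. It is an illustration of the general approach only; `perturbation_sensitivity`, `toy_predict`, the feature names, and the 10% jitter scale are hypothetical and do not correspond to any named Robust Intelligence test.

```python
import random
from typing import Callable, Dict, List

Row = Dict[str, float]
PredictFn = Callable[[Row], float]


def perturbation_sensitivity(
    predict: PredictFn, rows: List[Row], feature: str,
    scale: float = 0.1, seed: int = 0,
) -> float:
    """Mean absolute prediction change when `feature` is jittered by up to +/- scale."""
    rng = random.Random(seed)  # fixed seed keeps the check reproducible
    total = 0.0
    for row in rows:
        perturbed = dict(row)  # copy so the original row is untouched
        perturbed[feature] = row[feature] * (1 + rng.uniform(-scale, scale))
        total += abs(predict(perturbed) - predict(row))
    return total / len(rows)


def toy_predict(row: Row) -> float:
    """Hypothetical stand-in model: the score depends only on income."""
    return min(1.0, row["income"] / 100_000)


sample_rows = [
    {"age": 30.0, "income": 40_000.0},
    {"age": 55.0, "income": 60_000.0},
]

income_sensitivity = perturbation_sensitivity(toy_predict, sample_rows, "income")
age_sensitivity = perturbation_sensitivity(toy_predict, sample_rows, "age")
```

Because the check only calls `predict`, it needs no knowledge of the model's internals. A large sensitivity to small perturbations of one feature would flag that feature as a potential axis of vulnerability.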

During the development stage of your model’s lifecycle, the Stress Testing framework can help you ensure that your model is performing optimally, across all risk categories. By analyzing individual tests, you can identify areas where your model needs improvement, and refine it accordingly. By identifying these issues early on in the development process, you can address them proactively and ensure that your model is compliant with applicable regulatory or governance standards.

The Stress Testing framework is a powerful tool for measuring the robustness of your AI model before deployment. Its pre-configured tests cover a wide range of potential vulnerabilities, allowing you to identify areas where your model needs improvement, and address them proactively.

Robust Intelligence Inputs

To run stress tests, the platform takes as input:

Data: Robust Intelligence requires sample data in the form of a reference dataset and an evaluation dataset:

the reference set contains clean training data

the evaluation set contains held-out data representative of what the model will encounter in production

These datasets need to be registered with the RI Data Registry before you use them. They can optionally have ground-truth labels and associated model predictions (which should be registered with the Prediction Registry). This additional information allows the testing suite to run more tests than would otherwise be possible.

Model: Providing access to the model allows for testing the model behavior under different circumstances. The tests perturb model inputs, provide them to the model, and examine the model behavior to uncover its vulnerabilities. The model is treated as a black box, and providing access to the model entails registering a model.py file with the RI Model Registry. The model.py file requires a prediction function that takes in an input from Robust Intelligence and provides the model prediction given this input. By treating the model as a black box, Robust Intelligence can easily integrate with whatever model framework you use.
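As a rough sketch of what such a file could look like, the example below wraps a toy model behind a single prediction function. The function name `predict_rows`, its input and output types, and the hard-coded weights are assumptions made for illustration; this page does not specify the exact name or signature the platform expects, so consult the Model Registry documentation for the real contract.

```python
# model.py (illustrative sketch; the function name and signature are assumptions)
import math
from typing import Dict, List

# Hypothetical stand-in for a trained model: fixed logistic-regression weights.
# A real file would typically load a serialized model from disk instead.
_WEIGHTS = {"age": 0.03, "income": 0.00002}
_BIAS = -2.0


def predict_rows(rows: List[Dict[str, float]]) -> List[float]:
    """Return one positive-class probability per input row."""
    probabilities = []
    for row in rows:
        z = _BIAS + sum(weight * row[name] for name, weight in _WEIGHTS.items())
        probabilities.append(1.0 / (1.0 + math.exp(-z)))
    return probabilities


example_output = predict_rows([{"age": 40.0, "income": 50_000.0}])
```

Keeping all framework-specific logic inside the prediction function is what lets the caller treat the model as a black box: only inputs and outputs cross the boundary.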

AI Compliance Management with Model Cards

Once you’ve run stress tests, Robust Intelligence lets you download auto-generated model cards for internal and external documentation needs. These reports help your organization comply with AI regulatory standards and maintain a record of test results.

Workspace Overview

A Robust Intelligence workspace provides a single view of all models in production. It shows the health status of each model and lets you track models against any custom metric, which is especially useful for AI leadership in organizations.